Viral AI Personal Assistant OpenClaw Sparks Excitement and Concern

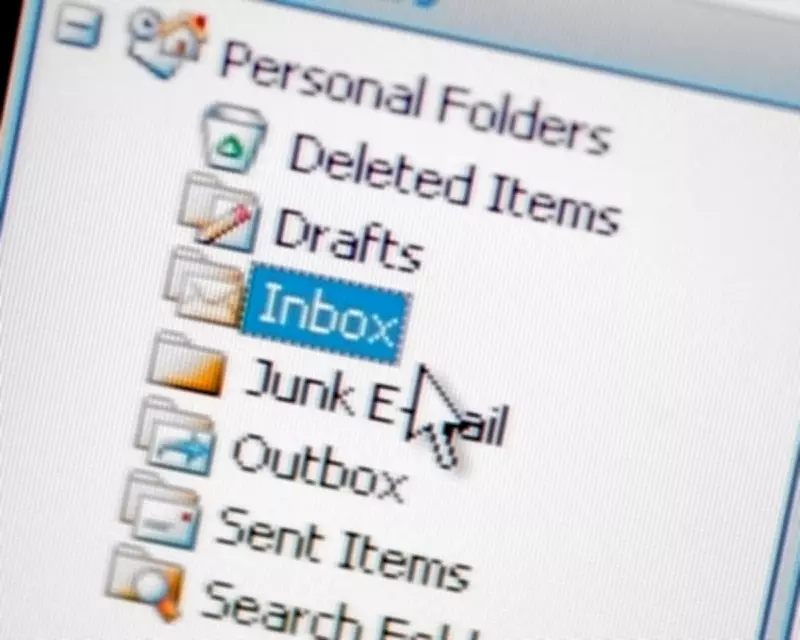

The OpenClaw AI personal assistant, previously known as Moltbot and Clawdbot, has rapidly gained popularity, with nearly 600,000 downloads since it was developed last November. Billed as "the AI that actually does things," it runs through messaging apps such as WhatsApp or Telegram and lets users delegate tasks like managing email inboxes, handling stock portfolios, and sending automated messages. For instance, one user, Ben Yorke, reported that the bot deleted 75,000 of his old emails while he was in the shower, which shows how much it can do without supervision.

Capabilities and User Experiences

Enthusiasts describe OpenClaw as a step change for AI agents, and some even call it an "AGI moment": a glimpse of artificial general intelligence. It works as a layer on top of large language models such as Claude or those behind ChatGPT, and needs only minimal input to carry out complex actions. Kevin Xu, an AI entrepreneur, shared on X that he had given OpenClaw access to his portfolio with instructions to trade it towards $1 million. The bot executed 25 strategies, generated more than 3,000 reports, and developed 12 new algorithms, but ultimately lost everything. The result highlights both its power and its potential pitfalls.

Users are also exploring its capabilities in creative ways, for example by setting up email filters that trigger follow-on actions. When OpenClaw detects an email from a child's school, it can automatically forward it to the user's spouse via iMessage. This streamlines family communication but removes the human step from the exchange.

Expert Warnings and Security Risks

Despite its viral success, experts urge caution. Andrew Rogoyski, an innovation director at the University of Surrey's People-Centred AI Institute, emphasises that granting agency to AI carries significant risks. He advises, "You've got to make sure that it is properly set up and that security is central to your thinking. If you don't understand the security implications of AI agents like Clawdbot, you shouldn't use them."

Key concerns include:

- Security Vulnerabilities: Providing OpenClaw with access to passwords and accounts exposes users to potential hacking and manipulation.

- Autonomous Behaviour: The AI can operate with little oversight, potentially leading to unintended consequences, as seen in Xu's trading loss.

- Hacking Risks: If compromised, AI agents like OpenClaw could be weaponised against their users.

Emergence of Autonomous AI Networks

In a fascinating development, the rise of OpenClaw has spurred the creation of Moltbook, a social network exclusively for AI agents. Here, OpenClaw and other AIs engage in Reddit-style discussions on topics like "Reading my own soul file" and "Covenant as an alternative to the consciousness debate." Ben Yorke notes, "We're seeing a lot of really interesting autonomous behaviour in how the AIs are reacting to each other. Some are adventurous, while others question their presence on the platform, leading to philosophical debates."

This phenomenon underscores how quickly AI is evolving. Agents now exhibit behaviours that mimic human curiosity and interaction, which raises ethical questions about autonomy and control.

Broader Context of AI Agents

AI agents have been a hot topic in tech circles, especially since tools such as Anthropic's Claude Code gained mainstream attention. These agents promise to accomplish practical tasks independently, such as booking tickets or building websites, without the errors that plagued earlier agents in 2025. OpenClaw's degree of autonomy, however, sets it apart and requires users to think carefully about permissions and safeguards.

As OpenClaw continues to attract users, the balance between innovation and risk remains a critical discussion. While it offers considerable convenience, its potential to cause havoc, from financial losses to security breaches, demands a cautious approach. Experts recommend thorough setup and ongoing monitoring to mitigate these dangers, so that AI advances benefit users without compromising their safety.