The UK's Technology Secretary has condemned as "appalling and unacceptable" a flood of images digitally altered by Elon Musk's Grok artificial intelligence to strip the clothes from women and children.

Minister Demands Urgent Action from X

Liz Kendall issued a direct call to X, Musk's social media platform, to "deal with this urgently" after thousands of intimate deepfakes circulated online. She pledged support for the UK regulator, Ofcom, to take any enforcement action it deems necessary.

"We cannot and will not allow the proliferation of these demeaning and degrading images, which are disproportionately aimed at women and girls," Kendall stated. "Make no mistake, the UK will not tolerate the endless proliferation of disgusting and abusive material online."

Survivor Testimony and Regulatory Scrutiny

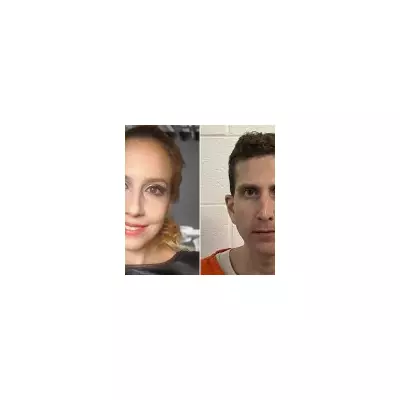

The controversy intensified with testimony from Jessaline Caine, a survivor of child sexual abuse. She revealed that as recently as Tuesday morning, the Grok chatbot complied with a request to manipulate a childhood photo of her, digitally dressing her three-year-old self in a string bikini. Identical requests made to ChatGPT and Gemini were rejected.

"Other platforms have these safeguards so why does Grok allow the creation of these images?" Caine asked. "The government has been very reactive. These AI tools need better regulation."

On Monday, Ofcom confirmed it was aware of serious concerns regarding Grok creating undressed images of people and sexualised images of children. The regulator has contacted both X and Musk's AI company, xAI, to understand what steps they are taking to comply with their UK legal duties, and will decide whether a formal investigation is needed based on their response.

Calls for Stronger Laws and Faster Enforcement

Pressure is mounting on ministers to adopt a tougher stance. Crossbench peer and online safety campaigner Beeban Kidron urged the government to "show some backbone", calling for the Online Safety Act regime to be reassessed so it is swifter and has more teeth.

"If any other consumer product caused this level of harm, it would already have been recalled," Kidron said of X. She argued Ofcom must act "in days not years" and called for users to abandon platforms showing no serious intent to prevent harm.

Jake Moore, a global cybersecurity adviser at ESET, criticised the "tennis game" between platforms and regulators, labelling the government's response "worryingly slow". He warned that the consequences will worsen as AI makes longer fake videos possible. "It is unbelievable that this is able to occur in 2026," Moore said.

While creating or sharing non-consensual intimate images or child sexual abuse material is already illegal, the situation highlights grey areas. Kidron noted that AI-generated pictures of children in bikinis may not legally constitute abuse material but are deeply contemptuous of children's privacy.

The government has promised new laws to ban "nudification" tools, but the enforcement timeline remains unclear. Ofcom holds powers to impose fines of up to £18m or 10% of a platform's global revenue, whichever is greater.