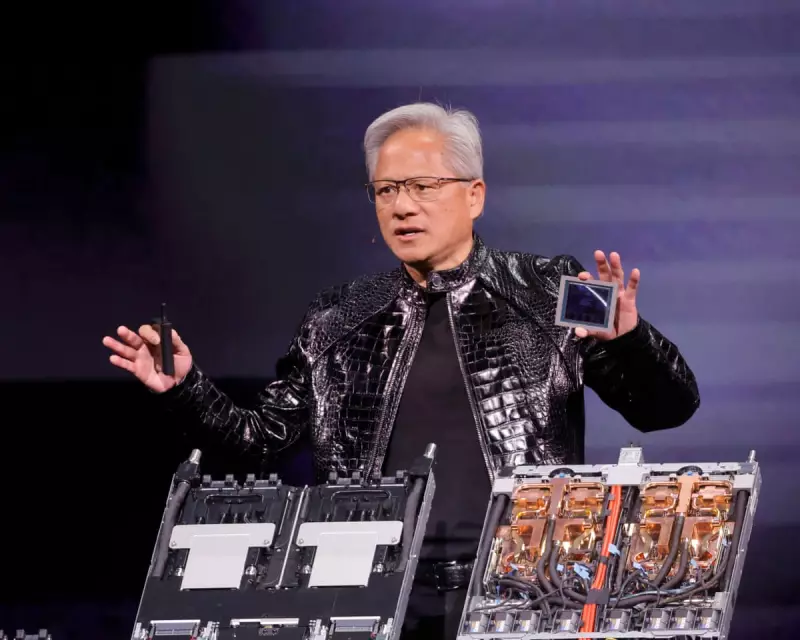

Nvidia has announced that its next generation of artificial intelligence chips is now in full production, with the company's CEO promising a dramatic leap in computing power for AI applications such as chatbots. The announcement came during a keynote speech by Jensen Huang at the Consumer Electronics Show (CES) in Las Vegas on Monday.

The Vera Rubin Platform: A Giant Leap for AI

Huang, who leads the world's most valuable company, detailed the new Vera Rubin platform, which is built from six separate Nvidia chips. The flagship server will pack 72 graphics units and 36 new central processors. Huang showed how these components can be linked into "pods" containing more than 1,000 Rubin chips, and claimed they can improve the efficiency of generating AI "tokens" (the fundamental units of AI systems) tenfold.

Huang attributed this performance gain to a key innovation: the chips use a proprietary data format, which Nvidia hopes the wider industry will adopt. "This is how we were able to deliver such a gigantic step up in performance, even though we only have 1.6 times the number of transistors," Huang explained from the stage.

Facing Rising Competition in AI Inference

While Nvidia remains the undisputed leader in training complex AI models, it faces intensifying competition in the race to deliver those models to end users. Rivals such as Advanced Micro Devices (AMD) and major customers such as Alphabet's Google are developing their own solutions. Much of Huang's address focused on how well the new Rubin chips handle this task, known as "AI inference", which powers services used by hundreds of millions of people.

One significant enhancement aimed at improving the user experience is a new storage layer called "context memory storage". It is designed to help chatbots such as ChatGPT respond faster during long conversations and complex queries.

Beyond Chips: Networking, Software, and Strategic Moves

Nvidia's announcements extended beyond processing units. The company also promoted a new generation of networking switches featuring co-packaged optics, a technology crucial for linking thousands of machines into a single supercomputer. This move positions Nvidia against established players like Broadcom and Cisco Systems.

In the automotive sector, Huang highlighted new software, Alpamayo, which helps self-driving cars make path decisions and creates an auditable record for engineers. Nvidia plans to release this software more widely and will also open-source the training data. "Not only do we open-source the models, we also open-source the data that we use to train those models, because only in that way can you truly trust how the models came to be," Huang stated.

The CES presentation came against a backdrop of strategic manoeuvring. Last month, Nvidia acquired talent and chip technology from the startup Groq, including executives who helped Google design its AI chips. Playing down the deal's significance, Huang told analysts that the Groq deal "won't affect our core business" but could lead to new products.

At the same time, Nvidia is keen to demonstrate that its latest offerings outperform older chips such as the H200, which have been permitted for export to China, a situation that has raised concerns among US policymakers. With the Vera Rubin platform set to arrive later this year, Nvidia is positioning itself to maintain its dominance in the fiercely competitive and rapidly evolving AI hardware market.